Imagine everything human sight is capable of, and you can begin to appreciate the nearly endless applications of computer vision. This detailed piece covers the scope and potential of computer vision, illustrated with 47 examples of computer vision applications across six industry segments.

It’s no secret that data science has reached every industry, leaving no stone unturned. Businesses try to harness a wide variety of data at every step of their operations to maximize efficiency.

It only makes sense for people to become familiar with at least the basic algorithms and tools for analyzing data in their respective domains. Doing so helps them understand trends better and, in turn, make better decisions.

Sound a bit far-fetched? Rather than beat about the bush, let’s turn to some credible research. For instance, according to a report published on PRNewswire:

The global computer vision industry generated $9.45 billion in 2020, and experts predict it will generate around $41.11 billion by 2030. That is a whopping compound annual growth rate (CAGR) of 16.0% over the period.
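As a quick sanity check, the standard CAGR formula can be applied to the two market values over the ten years from 2020 to 2030. This is our own back-of-the-envelope arithmetic, not a calculation taken from the report. It gives roughly 15.8%, which is close to the quoted 16.0%.

```latex
\text{CAGR} = \left(\frac{V_{2030}}{V_{2020}}\right)^{1/n} - 1
            = \left(\frac{41.11}{9.45}\right)^{1/10} - 1
            \approx (4.350)^{0.1} - 1
            \approx 0.158
```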

What is Computer Vision?

Before diving into the applications of computer vision, it’s important to first understand what computer vision is.

Computer vision is an area of artificial intelligence and computer science that teaches computers to “see” and understand the content of digital images. In other words, the goal is to give computers a visual understanding of the world.

Computer vision involves the automatic extraction of information from images, often by mimicking human vision. It combines programming, modeling, and mathematics, which makes it a very attractive field of study.

How Does Computer Vision Work?

Today, almost everything around us creates digital content. Many everyday devices and gadgets capture images and videos, from small sensors installed on supermarket doors to more sophisticated smartphones.

Analyzing this digital content can yield a great deal of useful information across all sectors. One way to do this is to have people perform the analysis, but that requires a large investment of time, energy, and effort.

A smarter alternative is to draw on the power of machine learning (ML) and deep learning (DL). The task is to apply ML and DL to digital images and videos to classify (recognize) the objects that appear in them.

A computer vision system typically works through these basic steps:

Acquisition: Acquisition is exactly what it sounds like. It means obtaining the images that a computer vision algorithm needs to process. Images can come from many sources, including cameras built into mobile devices and computers. With the explosion of modern smartphones, phone cameras have become an increasingly popular way to generate visual datasets.

Processing: Processing (often called preprocessing) means any computer work performed on image data after acquisition and before the main algorithm runs. It can serve different purposes. For instance, you might resize the image before applying the algorithm, or crop or rotate images before passing them to the algorithm.

This step is especially common for algorithms designed to work with images of a specific size, because it ensures that the input is always in the correct format.
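Below is a minimal sketch of this step in plain Python. It assumes a grayscale image stored as a list of rows of pixel values, which is a simplification; real pipelines would typically use an image library such as OpenCV or Pillow. `center_crop`, `resize_nearest`, and `preprocess` are illustrative names, and nearest-neighbor sampling is only one of several possible resizing methods.

```python
def resize_nearest(image, new_width, new_height):
    """Resize a list-of-rows image with nearest-neighbor sampling."""
    old_height, old_width = len(image), len(image[0])
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]

def center_crop(image, width, height):
    """Cut a width x height window out of the middle of the image."""
    top = (len(image) - height) // 2
    left = (len(image[0]) - width) // 2
    return [row[left:left + width] for row in image[top:top + height]]

def preprocess(image, size):
    """Crop to a centered square, then resize so every input is size x size."""
    side = min(len(image), len(image[0]))
    return resize_nearest(center_crop(image, side, side), size, size)

# A 6x8 test image whose values encode each pixel's position (10 * row + column).
img = [[10 * y + x for x in range(8)] for y in range(6)]
print(preprocess(img, size=3))  # [[1, 3, 5], [21, 23, 25], [41, 43, 45]]
```

Cropping to a square before resizing avoids distorting the aspect ratio. The trade-off is that some content at the edges is discarded.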

Segmentation: Segmentation divides an image into several segments so that each can be analyzed separately. For example, suppose you want to build an algorithm that detects apples in images, and you feed your dataset into it without segmenting the images first.

In that case, the result will probably be one long list of every apple detection found in your dataset, some correct and others badly wrong. If you segment the images so that each apple is a separate instance, each apple receives its own entry in the list.
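One classic way to turn a binary mask into separate instances is connected-component labeling. The sketch below assumes a hypothetical mask in which 1 marks pixels that an earlier step (for example, a color threshold) judged to be apple-colored. Every 4-connected group of 1s then becomes its own object. Production systems usually rely on learned instance segmentation models instead, so treat this as an illustration of the idea only.

```python
from collections import deque

def label_components(mask):
    """Label 4-connected regions of 1s in a binary mask.

    Returns (labels, count). labels has the mask's shape, with 0 for
    background and 1..count identifying each separate object.
    """
    height, width = len(mask), len(mask[0])
    labels = [[0] * width for _ in range(height)]
    count = 0
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or labels[y][x]:
                continue  # background, or already part of a labeled object
            count += 1  # start a new object and flood-fill it
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count

mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0],
]
labels, count = label_components(mask)
print(count)  # 2
```

Two apples that touch would merge into a single component here. Learned segmentation models handle that kind of case better.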

Feature Extraction: Feature extraction transforms raw image data into something that can be fed into an algorithm. For example, if you are training an algorithm on images that contain apples, it would be pointless to give it only a simple description of what an apple looks like.

It needs far more information than that. Instead, extract features from the images themselves so that they can be labeled with descriptions of the fruit they come from (e.g., apple = “red”, “round”, etc.).
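As a rough illustration, the hypothetical function below reduces the pixels of one segmented object to a handful of numeric features. These are its mean red, green, and blue values, a simple “redness” ratio, and its area in pixels. The choice of features is our own assumption for this example. Real systems often use richer shape and texture descriptors, or features learned by a neural network.

```python
def color_features(pixels):
    """Summarize one segmented object, given as a list of (r, g, b) pixels."""
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    total = mean_r + mean_g + mean_b
    # Fraction of the overall brightness that comes from the red channel.
    redness = mean_r / total if total else 0.0
    return {"mean_rgb": (mean_r, mean_g, mean_b), "redness": redness, "area": n}

features = color_features([(200, 40, 40), (220, 20, 20)])
print(features)  # redness is about 0.78 for this strongly red object
```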

Classification: Classification is one of the most critical steps in computer vision. It is the step in which data is assigned a label based on the extracted features. Classification might, for example, involve distinguishing red tomatoes from green or yellow ones.

Classification is generally done using statistics combined with machine learning. For instance, if a trait proves highly reliable in predicting the type of object in question (e.g., red = tomato), it will carry more weight in future classifications.
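A nearest-centroid classifier is one of the simplest ways to see this in action. In the sketch below, each label's centroid is the average feature vector (mean R, G, B) of its training samples. A new object gets the label of the closest centroid. The optional `weights` argument lets a more reliable feature count for more in the distance, which loosely mirrors the idea above. The training values are invented for illustration, and real systems would typically use a trained model such as a neural network.

```python
import math

def train_centroids(samples):
    """Average the feature vectors of each label; samples is [(features, label)]."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def classify(features, centroids, weights=None):
    """Return the label whose centroid is nearest (weighted Euclidean distance)."""
    weights = weights or [1.0] * len(features)

    def distance(centroid):
        return math.sqrt(sum(w * (f - c) ** 2
                             for w, f, c in zip(weights, features, centroid)))

    return min(centroids, key=lambda label: distance(centroids[label]))

# Hypothetical training data: mean (R, G, B) of previously labeled tomatoes.
training = [
    ((210, 35, 30), "red"), ((190, 45, 50), "red"),
    ((225, 205, 40), "yellow"), ((215, 195, 60), "yellow"),
    ((85, 165, 55), "green"), ((75, 155, 65), "green"),
]
centroids = train_centroids(training)
print(classify((205, 50, 45), centroids))  # red
```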

Result Aggregation: Aggregating results simply means collecting and organizing the results of each classification so that the people who need them can use them. One of the main advantages of a computer vision system is that it can process massive amounts of data much faster than humans can.

For this reason, many computer vision providers deliver their results through APIs that application developers can integrate into their applications or websites.
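Here is a minimal sketch of the aggregation step. It assumes per-object results arrive as hypothetical `(image_id, label)` pairs and collapses them into a JSON summary of the kind an API endpoint might return. The field names are our own invention, not any particular provider's format.

```python
import json
from collections import Counter

def aggregate(results):
    """Summarize (image_id, label) pairs as a JSON string."""
    counts = Counter(label for _, label in results)
    summary = {
        "images_processed": len({image_id for image_id, _ in results}),
        "objects_found": len(results),
        "counts_by_label": dict(sorted(counts.items())),
    }
    return json.dumps(summary)

results = [("img-1", "red tomato"), ("img-1", "green tomato"), ("img-2", "red tomato")]
print(aggregate(results))
# {"images_processed": 2, "objects_found": 3, "counts_by_label": {"green tomato": 1, "red tomato": 2}}
```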

Examples of Computer Vision Applications Across Different Industries

Computer vision gives machines a sense of sight – allowing them to “see” and explore the world through Machine Learning and Deep Learning algorithms.

This powerful technology has quickly found application in many industries and has become an indispensable part of technology development and digital transformation.

But how do companies actually benefit from the use of computer vision?

In the section below, we have compiled a list of the most famous examples of computer vision applications in key industries:

1. Computer Vision Applications in the Manufacturing Sector

As part of Industry 4.0, a new manufacturing revolution, the manufacturing industry is adopting a range of automation solutions. To transform the way products are made, manufacturers are embracing advanced technologies such as artificial intelligence (AI), machine learning (ML), the Internet of Things (IoT), computer vision, and robotics.

Specifically, computer vision has come to the fore and has revolutionized various segments of the production process with its intelligent automation solutions. Some of them include:

i) Product Assembly Automation

Computer vision applications play a significant role in assembling products and components on the production floor. As part of Industry 4.0 automation, many manufacturers use computer vision to implement fully automated product assembly and management processes.

For example, Tesla’s manufacturing process is reported to be nearly 70% automated. Product designs are created as 3D models in computer-aided design (CAD) software.

Based on these designs, computer vision systems accurately control the assembly process, monitoring and guiding both robotic arms and assembly-line personnel.

ii) Defect Detection

Computer vision systems can identify multiple categories of defects in the images they process and flag errors automatically.

Manufacturers often strive for 100% accuracy in detecting defects in their products. This can require monitoring for micro-scale defects, such as defective fibers.

Finding these defects only at the end of the manufacturing process, or after delivery to the customer, can raise production costs and lead to customer dissatisfaction. These losses are typically much higher than the cost of implementing an AI-driven computer vision inspection system.

Such an application collects real-time data from cameras and analyzes the data stream with machine learning algorithms. It detects defects against predefined quality standards and reports the percentage deviation from those standards.

This data also makes it possible to monitor delays on the production line, which helps the process run smoothly and efficiently. Remember, “The cost of failing to detect a defect is much higher than the cost of detecting it.” Investing in a computer vision-based defect detection system can therefore be a cost-effective solution.
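To make “percentage deviation” concrete, here is a minimal sketch that compares measured dimensions against a hypothetical specification of nominal values and percentage tolerances. It assumes the measurements have already been extracted from camera images. The dimension names, nominal values, and tolerances are all invented for illustration.

```python
def percent_deviation(measured, nominal):
    """Signed deviation of a measurement from its nominal value, in percent."""
    return (measured - nominal) / nominal * 100.0

def inspect(measurements, spec):
    """Check each measured dimension against spec[name] = (nominal, tolerance_pct).

    Returns (report, defective). report maps each dimension to
    (deviation_pct rounded to 2 decimals, within_tolerance).
    """
    report = {}
    for name, measured in measurements.items():
        nominal, tolerance_pct = spec[name]
        deviation = percent_deviation(measured, nominal)
        report[name] = (round(deviation, 2), abs(deviation) <= tolerance_pct)
    defective = not all(ok for _, ok in report.values())
    return report, defective

# Hypothetical part specification: nominal size in mm and allowed deviation in %.
spec = {"width_mm": (50.0, 1.0), "hole_diameter_mm": (5.0, 2.0)}
report, defective = inspect({"width_mm": 50.3, "hole_diameter_mm": 5.2}, spec)
print(report, defective)
# {'width_mm': (0.6, True), 'hole_diameter_mm': (4.0, False)} True
```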

iii) 3D Vision System

Computer vision inspection systems are also used to verify that components on the production line meet their specifications. In this case, the system uses high-resolution images to build a complete 3D model of the components and their connector pins.

As the components move through the production unit, the computer vision system captures images from different angles and combines them into a 3D model.

AI algorithms then analyze these combined images to identify any flaws or minor deviations from the design. This technology is highly valuable in industries such as automotive, electronic circuits, and oil and gas.

iv) Computer Vision-Guided Die Cutting

Rotary and laser cutting are the most commonly used cutting technologies in production. Rotary cutting uses hard tooling and steel blades, while laser cutting uses a high-powered laser beam. Laser cutting is more accurate but struggles with hard materials, whereas rotary cutting can be used on any material.

Manufacturers can use computer vision systems to make rotary cutting as accurate as laser cutting for any design. Once the design model is loaded into the computer vision system, the system guides the cutting tool, laser or rotary, to make a precise cut.

v) Predictive Maintenance

Some manufacturing processes take place at extreme temperatures and in harsh environmental conditions, so material degradation and corrosion are common. This can deform equipment and, if left untreated, lead to severe losses and shut down the production process.

Manufacturers therefore use corrosion protection techniques and predictive maintenance to keep their machines healthy. Traditionally, equipment is checked manually. Computer vision systems, however, can monitor devices continuously across various metrics.

If a deviation in one of these metrics indicates corrosion, the system can proactively alert managers to perform maintenance.
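One simple way to decide when a monitored metric “deviates” is to compute a z-score against its own history. The sketch assumes a hypothetical metric: the fraction of a machine surface’s pixels that a vision model has classified as rust in each daily inspection image. The three-standard-deviation threshold is a common rule of thumb, not a value from the source, and would need tuning in practice.

```python
import statistics

def corrosion_alert(history, current, k=3.0, min_spread=1e-6):
    """Return (alert, z_score) for the current reading versus its history.

    An alert is raised when the reading sits more than k population
    standard deviations above the historical mean. min_spread avoids
    division by zero when the history is perfectly flat.
    """
    baseline = statistics.mean(history)
    spread = max(statistics.pstdev(history), min_spread)
    z_score = (current - baseline) / spread
    return z_score > k, z_score

# Hypothetical daily rust-pixel fractions for one machine.
history = [0.010, 0.012, 0.011, 0.009, 0.010]
print(corrosion_alert(history, 0.011)[0])  # False
print(corrosion_alert(history, 0.020)[0])  # True
```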

vi) Safety and Security Standards

Manufacturing employees often work in hazardous conditions, which dramatically increases the risk of injury. Failure to observe safety instructions can result in severe injury or death. Manufacturing facilities must comply with government safety standards, and companies that fail to comply face sanctions.

Many manufacturing companies have installed cameras to monitor worker movements in the factory and enforce safety standards. However, this is usually a manual monitoring process that requires someone to watch the video stream constantly.

Manual processes are prone to errors, which can have serious consequences. Computer vision with AI can be a great solution. Such systems can monitor the production site continuously and immediately notify the relevant managers and employees of even a minor violation. This helps manufacturers ensure that their employees meet safety and security standards.

If an accident occurs, the computer vision system can alert managers and employees to its location and severity. This gives them time to stop production in that specific area and proactively protect employees.

vii) Improved Packaging Standards

In some manufacturing companies, it is essential to count the pieces produced before packing them into a container. Doing this manually can lead to many errors. The problem is especially common with pharmaceutical and retail products.

A suitable computer vision application can be a lifesaver in such conditions. Once the items are packaged, computer vision can also be used to check the packaging itself for damage.

Products must arrive at the customer safely and on time, and damaged packaging can also damage the product inside. Computer vision systems can proactively flag damaged packages so they are removed before they leave the station.

viii) Barcode Analysis

Another important task is barcode checking. Most products have a barcode, and the packaging department must verify that the printed barcodes are correct and legible. Manually scanning the barcodes of thousands of products takes many person-hours and is both error-prone and expensive.

Computer vision systems can quickly check barcodes and redirect products with the wrong barcodes, saving much time and effort.
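Reading a barcode from an image is usually handled by a dedicated decoding library. Once the digits are decoded, however, verifying them is simple arithmetic. EAN-13 is a common retail barcode format, and its 13th digit is a check digit computed from the first twelve using alternating weights of 1 and 3. The sketch below assumes the decoder has already returned the digits as a string. The function name is our own.

```python
def ean13_is_valid(code):
    """Return True if a decoded 13-digit EAN-13 string has a correct check digit."""
    if len(code) != 13 or any(c not in "0123456789" for c in code):
        return False
    digits = [int(c) for c in code]
    # Weights alternate 1, 3, 1, 3, ... across the first twelve digits.
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    expected_check = (10 - total % 10) % 10
    return expected_check == digits[12]

print(ean13_is_valid("4006381333931"))  # True
print(ean13_is_valid("4006381333932"))  # False (wrong check digit)
```

Products whose codes fail this check, or cannot be decoded at all, would be the ones a system diverts for relabeling.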

ix) Inventory Management

Computer vision systems can also help count inventory, maintain inventory levels in warehouses, and automatically alert managers when materials are needed for on-demand production. These systems help prevent human error in inventory counts. They also address another difficulty, since finding stock in large warehouses is hard.

Using a computer vision system based on barcode data can help warehouse managers find products in stock much more quickly and conveniently.

2. Computer Vision Applications in Transportation

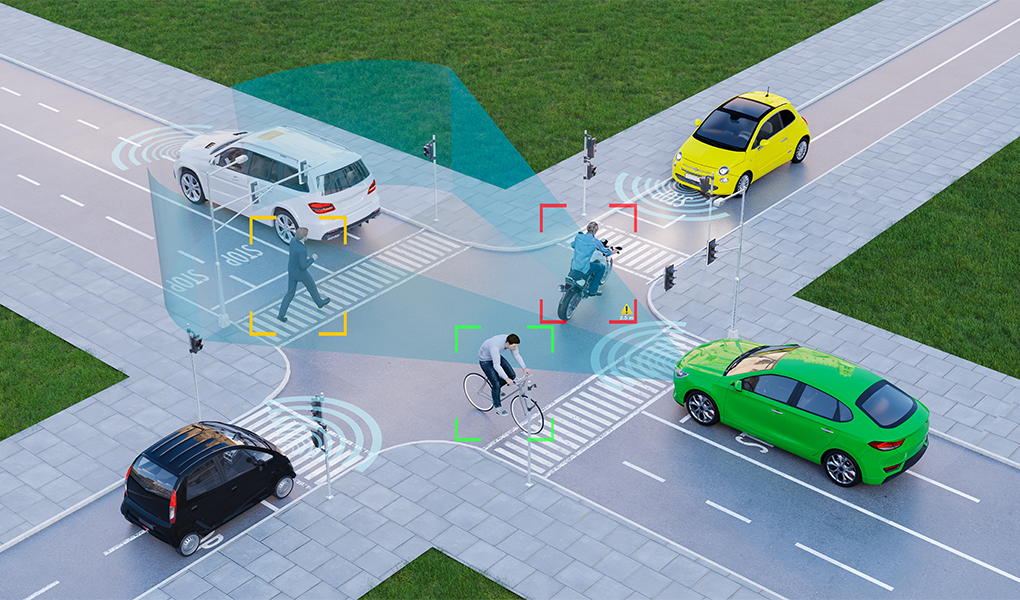

Many traffic problems can be addressed by analyzing large amounts of vehicle data and connecting road infrastructure into a seamless information-exchange network. The benefits of using artificial intelligence here extend beyond cities and drivers to carriers, pedestrians, and the environment.

AI benefits the entire transportation ecosystem, not just one of its components. We should all be interested in developing these technologies and applying them as widely as possible in transportation.

i) Detecting Traffic and Traffic Signs

If the rules of the road were reduced to a single rule that even children understand, red and green lights would undoubtedly be it. Yet hundreds of accidents happen every year because drivers run red lights or fail to stop in time. Many factors contribute to such outcomes, for instance driver fatigue, bad weather, phone use while driving, or simply rushing under time pressure.

Mistakes behind the wheel are simply inevitable. However, teaching machines to recognize traffic lights can eliminate many such errors and revolutionize the outcomes; BMW and Mercedes made the first attempts. With this technology, the braking system reacts automatically when the driver tries to run a red light, avoiding a potential catastrophe.
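Production systems detect and classify traffic lights with trained neural networks, but the color-classification part of the idea can be sketched simply. Assume an earlier step has already located the lit lamp and averaged its pixel color. The function below converts that color to hue, saturation, and value with Python’s standard `colorsys` module and then maps the hue to a light state. The hue bands and cut-offs are our own rough assumptions.

```python
import colorsys

def light_color(r, g, b):
    """Map the average (r, g, b) color of a lit lamp (0-255 each) to a state."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if s < 0.4 or v < 0.3:
        return "unknown"  # too washed out or too dark to trust
    hue = h * 360  # colorsys returns hue in [0, 1)
    if hue < 20 or hue >= 330:
        return "red"
    if hue < 70:
        return "yellow"
    if hue < 200:  # traffic-light green is often a bluish green
        return "green"
    return "unknown"

print(light_color(255, 0, 0))    # red
print(light_color(255, 200, 0))  # yellow
print(light_color(0, 255, 100))  # green
```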

ii) Pedestrian Detection

One of the major factors preventing the mass introduction of autonomous vehicles is the unpredictability of pedestrian movements and actions. Thanks to computer vision, AI can readily recognize trees, unusual objects, and pedestrians, and it can warn drivers of a person approaching the road.

Problems arise when a pedestrian is carrying food, walking a dog on a leash, or using a wheelchair. The unusual silhouette makes it harder for the machine to identify a person correctly.

It must be acknowledged, though, that combining several object detection cues (movement, structure, shape, or transitions) is practically 100% successful.

Pedestrian intent, however, remains a significant challenge. Will they step onto the road or not? Are they just walking along the roadside, or are they thinking about crossing? These cues are still ambiguous, and a neural network is needed to predict them effectively.

Human behavior assessment helps here. Because it relies on the dynamics of the human skeleton, it can predict pedestrians’ intent on roads and streets in real time.

iii) Traffic Flow Analysis

Noise, smog, clogged city streets, stressed drivers, financial losses, and greenhouse gas emissions are among the side effects of congestion and crowding in cities. AI can help address all of them and make transportation much more efficient and convenient.

Using data from in-vehicle sensors, city CCTV cameras, and drones that monitor vehicle flow, algorithms can watch and analyze traffic on highways and in the city. This allows them to warn drivers of possible traffic jams or accidents and to manage traffic flow effectively.

It is also useful for urban planners involved in building new roads and improving urban infrastructure. By analyzing previous traffic patterns and the large amount of data available, AI can identify the best planning solutions and help avoid problems at the planning stage.
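Counting vehicles that cross a virtual line is a common building block of traffic-flow analysis. The sketch assumes a separate detector and tracker (not shown) already provide each vehicle’s centroid in successive frames under a stable ID. It counts each vehicle at most once, in whichever direction it first crosses the line. Image coordinates are assumed, so y grows downward. The function name and track format are hypothetical.

```python
def count_line_crossings(tracks, line_y):
    """Count tracked vehicles crossing the horizontal line y = line_y.

    tracks maps a vehicle ID to its list of (x, y) centroids over time.
    Returns (downward, upward) counts.
    """
    down = up = 0
    for points in tracks.values():
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:
                down += 1
                break  # count each vehicle only once
            if y0 >= line_y > y1:
                up += 1
                break
    return down, up

tracks = {
    1: [(10, 50), (12, 90), (14, 130)],   # moves down across y = 100
    2: [(40, 150), (41, 110), (42, 70)],  # moves up across y = 100
    3: [(70, 20), (71, 40)],              # never reaches the line
}
print(count_line_crossings(tracks, line_y=100))  # (1, 1)
```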

v) Parking Management

It is often difficult to drive into the city center and find a parking space. A network of sensors that monitors available spaces, parking duration, and peak hours can significantly improve this vital aspect of traffic.

In vehicles with built-in navigation, AI can make it easier to find free parking, warn you of potential traffic jams, and locate your car when you forget where you parked it.

Such solutions are handy in places like airports, sports stadiums, and arenas, where traffic must flow freely and large crowds of visitors can pose a security risk.
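A common way to decide from a camera image whether a parking space is occupied is to compare the space’s outline with detected vehicle bounding boxes using intersection over union (IoU). In this sketch, spaces and detections are hypothetical axis-aligned boxes `(x1, y1, x2, y2)` in pixels, and the 0.3 overlap threshold is an assumed value. Real lots often need polygon outlines, because cameras view the spaces at an angle.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0

def free_spaces(spaces, vehicles, threshold=0.3):
    """Return IDs of spaces that no detected vehicle overlaps by >= threshold IoU."""
    return [
        space_id for space_id, box in spaces.items()
        if all(iou(box, v) < threshold for v in vehicles)
    ]

# Hypothetical lot layout and vehicle detections from one camera frame.
spaces = {"A1": (0, 0, 10, 20), "A2": (10, 0, 20, 20), "A3": (20, 0, 30, 20)}
vehicles = [(1, 1, 9, 19), (21, 2, 29, 18)]
print(free_spaces(spaces, vehicles))  # ['A2']
```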

vi) License Plate Recognition

License plate recognition is a practical application of artificial intelligence and computer vision. It is often used on highways, in tunnels, on ships, and in enclosed areas controlled by gates or barriers.

AI helps check whether a particular vehicle is on the list of registrations allowed into a specific area, for example because a fee has been paid or because of the driver’s status.

Automated license plate recognition is also a proven tool for police and security services. They can use it to trace an individual vehicle’s route or check a driver’s alibi.

vii) Road Condition Monitoring

Potholes inflict about $3 billion worth of vehicle damage in the United States alone each year. Intelligent algorithms can monitor road conditions and warn drivers of hazards before they are taken by surprise. They can also alert authorities to spots that will soon need repair. This is made possible by connecting a camera to ADAS (Advanced Driver Assistance Systems), which uses machine learning to collect real-time information about the road surface the vehicle is traveling on.

This helps warn drivers of road damage, wet surfaces, ice, potholes, or hazardous debris. It improves passenger safety, prevents accidents, and saves money for both drivers and city budgets.

viii) Automatic Traffic Incident Detection

Video surveillance has been with us for years. When AI solutions complement it, however, it becomes possible to detect traffic accidents more efficiently, react faster, and inform road users in near real time.

We can detect different types of accidents by connecting cameras to the ITS (Intelligent Transportation System) with the help of computer vision technology and equipping vehicles with intelligent sensors.

Intelligent algorithms save lives, prevent serious accidents, warn road users of dangerous situations, and recommend safer travel options.

The most commonly reported traffic incidents include vehicles driving too fast or too slow, pedestrians or animals entering the road, vehicles blocking traffic, cars driving in the wrong direction, and debris on the road.

ix) Driver Monitoring

Finally, there is a whole category of AI solutions focused on the car’s interior and the drivers themselves. Three of them are particularly valuable:

Driver Fatigue Monitoring – The system detects the driver’s face and assesses head position to recognize drowsiness and emotional state, helping to avoid accidents.

Driver Distraction Alerts – The system warns the driver when, for example, they are on a cell phone, looking away from the road, or turning toward the back seat to talk to fellow passengers.

Emergency Assistance Systems – If the driver becomes unresponsive and stops driving, the car first tries to wake them by braking and tightening the seat belts. If that doesn’t work, the vehicle stops and calls emergency services.
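Fatigue monitoring is often built on the eye aspect ratio (EAR) described by Soukupová and Čech. A facial landmark detector (not shown) supplies six landmarks around each eye, and EAR drops sharply when the eye closes. The sketch below computes EAR and flags drowsiness when it stays below a threshold for a run of consecutive frames. The 0.2 threshold and 15-frame run are illustrative assumptions, not calibrated values. `math.dist` requires Python 3.8 or later.

```python
import math

def eye_aspect_ratio(eye):
    """EAR from six (x, y) landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 lie on the upper lid, and p6/p5 lie below them on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def is_drowsy(ear_per_frame, threshold=0.2, min_frames=15):
    """Flag drowsiness when EAR stays below the threshold for min_frames in a row."""
    run = 0
    for ear in ear_per_frame:
        run = run + 1 if ear < threshold else 0
        if run >= min_frames:
            return True
    return False

open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
print(is_drowsy([0.3] * 10 + [0.1] * 15))    # True
```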

3. Computer Vision Applications in Healthcare

The way the world perceives and practices healthcare has changed dramatically over the years. Technology plays a significant role in this transformation. It has changed the way we diagnose, treat or even prevent disease.

Artificial intelligence has had a significant impact on this process, and one of its subfields, computer vision, has proven more valuable than most. Let’s see how.

i) Improved Medical Imaging

Computer vision applications help reduce the number of invasive surgeries previously needed to diagnose health conditions.

Computer vision software can detect abnormalities in scans of vital organs such as the heart, liver, and lungs.

This kind of software can be installed on a standard MRI device. When combined with augmented reality, it can display 3D renderings of patients’ scanned organs on a computer screen for monitoring.

It gives radiologists a clear picture of the patient’s condition without the need for major surgery. The system can also detect abnormalities that the human eye might otherwise miss, which improves diagnostic accuracy.

ii) Better Diagnostic Applications

Medical imaging has long been an integral part of healthcare. X-rays, 2D ultrasound, etc., are no longer new concepts.

Computer vision goes a step further and helps healthcare professionals make informed decisions about patients’ health.

Some programs use computer vision to convert 2D images into 3D models, which helps doctors identify problems more accurately.

Microsoft’s InnerEye is one such tool, and it can detect tumors and other abnormalities in medical scans. Radiologists upload scanned images to the software, which generates surface measurements for the organs and ligaments shown in the image. It then marks the tumor areas so that a radiologist can examine them and draw a sound conclusion.

iii) Cancer Screening

Computer vision and machine learning applications have enabled the early detection of cancer.

Certain cancers, such as skin cancer, can be challenging to detect because the symptoms are similar to common skin problems.

Researchers have developed applications that can distinguish cancerous skin lesions from non-cancerous ones. Using neural networks, scientists trained a model on more than 120,000 images of skin lesions.

Experiments have shown that the model can diagnose skin cancer as accurately as certified dermatologists.

Applications for detecting bone and breast cancer are also being developed. They are trained on images of healthy and cancerous tissue so they can learn to distinguish between the two.
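Claims that a model performs “as well as” clinicians are usually backed by metrics such as sensitivity and specificity. Sensitivity is the share of malignant lesions correctly flagged, and specificity is the share of benign lesions correctly cleared. The sketch below computes both from hypothetical labels, where 1 means malignant and 0 means benign. The data is invented purely to show the calculation.

```python
def sensitivity_specificity(y_true, y_pred):
    """Compute (sensitivity, specificity) from 0/1 ground truth and predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # cancers caught
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # cancers missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign, correctly cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign, wrongly flagged
    return tp / (tp + fn), tn / (tn + fp)

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(sens, round(spec, 3))  # 0.75 0.833
```

Reporting both numbers matters because a model can reach high sensitivity simply by flagging everything, at the cost of specificity.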

iv) Surgery Assistance

Machine learning models are integrated into healthcare procedures to improve success rates and reduce risk during surgery. These applications help doctors better prepare for invasive surgery to reduce the risk of complications.

Gauss Surgical’s deep learning app, called Triton, can estimate blood loss in real time during and after surgery. Triton processes images of surgical sponges, suction canisters, and other surgical equipment to estimate the amount of blood lost. This helps surgeons determine how much blood to give the patient during or after the procedure.

v) Research and Identifying Trends

Research firms may use prior patient examinations to identify developmental trends for a particular disease. These examinations can provide valuable insight into the course of the disease and the response to various medications in specific groups of patients.

These scans and studies can help shorten the time and effort needed for clinical trials. They can also help researchers find ways to prevent the disease altogether.

vi) Retention Management in Clinical Trials

The main problem during clinical trials is to verify that the subject/patient is following the correct instructions and prescribed treatment.

AiCure has developed software that allows researchers to monitor patients during clinical trials to address this.

Patients download the app and take their medication in front of the camera through it. The software then uses facial recognition technology to confirm whether the patient has actually taken the medication.

The app also provides the users with reminders to take their medication according to their schedule.

vii) Training

Even the best surgeons need some prep work for critically invasive surgery. TouchSurgery offers impressive and interactive surgery simulations.

With their DS1 computer, relevant videos are anonymized and uploaded to the cloud. The videos are then available in the surgeon’s account on both the computer and the mobile application.

The software uses computer vision to parse the video data, analyzes it, and compares it with the expected time for the procedure. This helps surgeons understand how the procedure can be performed more safely and in less time.

viii) Injury Prevention

Healthcare professionals and patients are often vulnerable to preventable injuries or illnesses. People who work in hospitals are almost twice as likely to be injured on the job as the average for all employees.

Computer vision can help detect and prevent some of these injuries. For example, it can recognize when someone falls and alert hospital staff.

Sometimes employees forget to use the necessary safety equipment or to sterilize instruments properly, which can increase the number of injuries in hospitals. Startups are working on computer vision systems that detect these errors and remind employees to follow protocol.

Some hospitals also use computer vision technology to detect fire and smoke faster than traditional detectors.

4. Computer Vision Applications in Agriculture

Computer vision is gaining ground in agriculture thanks to its automation and detection capabilities. From higher productivity to lower production costs through automation, it has improved the overall functioning of the agricultural sector.

AI-based vision helps farmers reach levels of performance that were previously unattainable. Labor costs are high and competition is fierce, but AI computer vision helps safeguard the heritage of agriculture. The spread of computing in agriculture is far-reaching, and the global market for AI in agriculture is projected to grow to around $2,075 million by 2024.

i) Computer Vision for Quality Inspection of Agricultural Food Products

Machine vision has been brought to agriculture and food production to verify food quality in real time. AI technologies also offer a non-destructive approach to evaluation. As a result, inspection systems provide reliable quality control while requiring minimal sample preparation for training.

In addition to quality control, automated systems show excellent results in product classification. Data shows that customers prefer apples whose largest diameter is between 75 and 80 mm, yet farmers would need to spend hours assessing fruit size by hand. This makes computer vision for agriculture valuable in a customer-oriented market.

ii) Image-based Plant Disease and Pests Detection

Computer vision is also used in agriculture to identify crop diseases. Tech-savvy farmers use smartphones to diagnose diseases and prevent crop loss.

Some computer vision applications rely on a public dataset of healthy and diseased plant leaves. Experts use this training data to train a deep convolutional neural network that identifies crop types and potential diseases. The resulting model has shown an accuracy of 99.35% in testing.

Image segmentation and classification techniques are essential aspects of plant disease detection, and they are often supplemented by a genetic algorithm.

The University of Hawaii has made its application available to everyone, launching Leaf Doctor in 2014. Now anyone can make a quantitative estimate of plant disease with a smartphone.

iii) Weed Detection

Weed control is one of the most important ways to improve crop production. But spraying chemical herbicides can do more harm than good, because the chemicals are often wasted and pollute agricultural land. The consequences are even more severe when herbicides are over-applied.

This is where computer vision software comes in. Today, the agricultural industry combines traditional image processing methods with deep learning-based approaches.

This combination allows farmers to tackle weed detection far more effectively. The technology lets farmers tell crops from weeds and spray precisely.
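Many weed detection pipelines start by separating plants from soil before deciding which plants are weeds. A classic traditional technique for that first step is the Excess Green index (ExG = 2g - r - b, computed on chromaticity-normalized channels). The sketch below builds a plant/soil mask from RGB pixels. The 0.1 threshold is an assumed value. Telling crops from weeds would still require a further step, such as a trained classifier or crop-row geometry.

```python
def excess_green_mask(image, threshold=0.1):
    """Return a 0/1 plant mask for an image given as rows of (r, g, b) pixels.

    Each channel is divided by r + g + b (chromatic coordinates), so
    ExG = 2g - r - b becomes (2G - R - B) / (R + G + B).
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            total = r + g + b
            if total == 0:
                mask_row.append(0)  # pure black: treat as background
                continue
            exg = (2 * g - r - b) / total
            mask_row.append(1 if exg > threshold else 0)
        mask.append(mask_row)
    return mask

# Hypothetical 2x2 field image: leaf, soil / black pixel, leaf.
field = [[(40, 120, 30), (120, 90, 60)], [(0, 0, 0), (60, 150, 50)]]
print(excess_green_mask(field))  # [[1, 0], [0, 1]]
```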

iv) Soil Sampling and Mapping with Drones

Drones, or unmanned aerial vehicles (UAVs), provide multispectral remote sensing of the ground below. Equipped with advanced imaging technology and cameras, and paired with AI, this hardware collects data across the electromagnetic spectrum on the light that bounces off the ground.

This bird’s-eye view allows farmers to analyze differences in the soil’s elemental composition. The data obtained this way lays the foundation for ideal seed-planting models and improves fertilization strategies. Drone-based monitoring can also detect changes in leaf color and trunk growth, and drones can provide accurate data even in cloudy conditions.

An important example of a UAV-based soil moisture system is a Monash University project whose mission is to optimize irrigation with drone technology. It also helps reduce unnecessary water consumption.
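Multispectral drone imagery is often summarized with vegetation indices. One widely used example is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red). It is high for healthy, dense vegetation and near zero or negative for bare soil and water. The sketch computes NDVI per pixel from two hypothetical reflectance bands given as lists of rows. It is one illustrative index, not necessarily what any particular project uses.

```python
def ndvi(nir, red):
    """NDVI for one pixel from near-infrared and red reflectance values."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total

def ndvi_map(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized bands (lists of rows)."""
    return [[ndvi(n, r) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_band, red_band)]

# Hypothetical 1x3 reflectance bands: dense crop, sparse crop, bare soil.
nir_band = [[0.50, 0.30, 0.25]]
red_band = [[0.08, 0.15, 0.20]]
print([[round(v, 2) for v in row] for row in ndvi_map(nir_band, red_band)])
# [[0.72, 0.33, 0.11]]
```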

v) Livestock Management

Farmers are also turning to artificial intelligence for precision farming, and animals can now be managed with new intelligent methods. For example, algorithms are trained to determine the species and behavior of animals. A properly trained drone can recognize, count, and track livestock without human help.

AI-led inspection systems can also collect phenotypic data, which includes critical information about an animal’s live weight, pregnancy status, and pedigree. All of this helps improve the farm’s profitability and productivity.

Remote monitoring of your herd can serve other purposes as well, such as detecting illness, behavioral changes, or births. Intelligent livestock management also helps determine breeding times and market maturity.

vi) Efficient Yield Analysis

A potent combination of deep learning and computer vision supports crop performance analysis. The automated process replaces time-consuming manual work and gives farmers additional information on crop variations and crop health.

To build these models, AI experts collect a wide range of existing data for machine learning, which can include climatic conditions, soil factors, and humidity.

vii) Computer Vision in Grading and Sorting of Crops

Computer vision applications also extend to the post-harvest chain, one of the most exciting uses of computer vision in agriculture. Here, AI systems grade and sort the harvested products, providing automated control of crop quality. The resulting data helps decide which products are suitable for longer shipping.

Grading also assigns the yield to different sales channels, such as local markets. From a technical point of view, grading relies mainly on traditional image transformation algorithms. Normalization and equalization algorithms standardize the visual data, which then supports automatic visual inspection.
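The normalization and equalization steps mentioned here can be sketched in a few lines. This minimal, dependency-free example works on an 8-bit grayscale image. It assumes min-max normalization and global histogram equalization as the specific techniques. Production graders would typically use an image library and may use different variants. The pixel values are made up.

```python
def min_max_normalize(gray, out_max=255):
    """Linearly stretch gray levels so the darkest pixel maps to 0
    and the brightest maps to out_max."""
    flat = [v for row in gray for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0 for _ in row] for row in gray]  # flat image: nothing to stretch
    scale = out_max / (hi - lo)
    return [[round((v - lo) * scale) for v in row] for row in gray]


def equalize_histogram(gray, levels=256):
    """Global histogram equalization for an 8-bit grayscale image.

    Each gray level is remapped through the cumulative histogram (CDF) so
    that the output levels spread more evenly across 0..levels-1.
    """
    flat = [v for row in gray for v in row]
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest level present
    total = len(flat)
    if total == cdf_min:
        return [list(row) for row in gray]  # single gray level: leave unchanged
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) for c in cdf]
    return [[lut[v] for v in row] for row in gray]


# A low-contrast 3x3 patch of a fruit image (values squeezed into 100-106).
gray = [[100, 102, 104], [100, 106, 104], [102, 100, 106]]
print(min_max_normalize(gray))  # → [[0, 85, 170], [0, 255, 170], [85, 0, 255]]
print(equalize_histogram(gray))
```

Both steps spread a narrow range of gray levels across the full 0-255 scale. Images taken under different lighting then look more alike to the downstream inspection step.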

viii) Phenotyping

Computer vision in agriculture is also used for plant phenotyping, the science of characterizing plants and crops. Phenotyping provides basic knowledge that supports agricultural decision-making, and it gives farmers accurate data to select the most appropriate genotypes.

Autonomous phenotyping systems offer opportunities for non-invasive crop diagnosis. AI systems collect image samples to determine plant traits such as height, width, color, and estimated fruit yield. This allows agricultural experts to monitor the development and physiological reactions of crops.
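The sketch below gives a rough illustration of how traits such as height, width, and color can be read off an image by measuring a plant’s bounding box and mean color. It assumes that a segmentation mask of the plant already exists. It also assumes a calibrated millimeters-per-pixel scale, passed in as the hypothetical `mm_per_pixel` parameter, which could be obtained from a reference object of known size. Estimating fruit yield would need a separate model and is not covered here.

```python
def plant_traits(mask, image, mm_per_pixel):
    """Estimate simple phenotypic traits from a side-view plant image.

    mask: 2-D list of booleans, True where the plant is (assumed to come
          from an upstream segmentation step).
    image: same-shaped 2-D list of (R, G, B) tuples.
    mm_per_pixel: assumed calibration factor (hypothetical).
    Returns bounding-box height and width in millimeters and the mean
    RGB color of plant pixels, or None if the mask is empty.
    """
    coords = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None
    ys = [y for y, _ in coords]
    xs = [x for _, x in coords]
    height_px = max(ys) - min(ys) + 1
    width_px = max(xs) - min(xs) + 1
    n = len(coords)
    mean_rgb = tuple(
        round(sum(image[y][x][ch] for y, x in coords) / n) for ch in range(3)
    )
    return {
        "height_mm": height_px * mm_per_pixel,
        "width_mm": width_px * mm_per_pixel,
        "mean_rgb": mean_rgb,
    }


# Hypothetical 4x3 mask of a small seedling and its (mostly uniform) colors.
mask = [
    [False, True, False],
    [True, True, True],
    [False, True, False],
    [False, True, False],
]
image = [[(60, 140, 50) for _ in range(3)] for _ in range(4)]
image[1][0] = (80, 120, 40)  # one slightly yellowed leaf pixel
print(plant_traits(mask, image, mm_per_pixel=2.5))
```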

Repositories of pre-trained models have also improved as next-generation models emerge, and continuous refinements are introducing new phenotyping frameworks.

ix) Indoor Farming

Finally, deep learning models are rapidly gaining ground in indoor farming. Compared with conventional farms, an indoor farm is relatively expensive to set up and maintain, and intelligent systems help reduce these operating costs.

They help maintain optimal light intensity and temperature. Inspection systems can also detect crop diseases without expert intervention. Doing this work manually would otherwise require considerable staff.

x) Machine Harvesting

Machines are an economical and efficient way to harvest crops, but we are not talking about conventional farm equipment like a combine harvester. We mean specialized robots equipped with object recognition technology and deep learning that autonomously pick fruits and vegetables.

This is made possible by two components. The first is the grasping part, a complex task performed by specialized hardware. The second is the software, or visual element, which identifies objects so they can be grasped appropriately.

Implementing machine vision in agriculture this way speeds up processing and reduces the need for manual labor. The benefits multiply during harvest season, when fruits and vegetables that go unpicked would otherwise rot in the field.

5. Computer Vision Applications in Retail

Since many retail activities require visual feedback and generate large amounts of data, the growing interest in computer vision among retailers should come as no surprise. According to RIS’s 29th Annual RIS Technology Survey, only 3% of retailers have already implemented computer vision technology, while 40% plan to implement it within the next two years.

Computer vision can address many of the pain points of stores and has the potential to transform the experience of customers and employees. For example, customer journeys can be redefined so that millions of store design decisions are based on actual data rather than intuition.

Thanks to machine learning and a holistic approach to implementing computer vision, the digital transformation of retail is becoming much more feasible. Here are some of the most popular computer vision applications in retail.

i) Visual Search Contributes to Improving Customer Experience

Visual search helps retailers improve the user experience. It is one of the most popular ways deep learning is used to enhance the shopping experience and overcome the limitations of text-based product discovery.

Some queries are hard to describe in words and are best served by visual search. The comprehensive Grid Dynamics project for Art.com illustrates the benefits of visual search by helping customers find artwork that is similar in style rather than in content.

This case study explains how deep learning vectorization models can capture the hard-to-describe “latent characteristics” of a painter’s style and then return search results featuring products of a similar kind.

In another example where customers might be interested in seeing a range of similar clothing, Macy’s.com offers a “view more like this” feature.

This feature lets users find visually similar options based on hidden features that are not available in faceted navigation.
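The retrieval step behind a “view more like this” feature can be sketched as a nearest-neighbor search over image embeddings. This minimal example assumes that a pretrained deep learning model has already converted each product image into a vector. The tiny vectors below are invented and do not come from Art.com or Macy’s.com. Items are ranked by cosine similarity using brute-force search for clarity. Large catalogs would normally use an approximate nearest-neighbor index instead.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def more_like_this(query_id, catalog, k=2):
    """Return the k catalog items whose embeddings are closest to the query's.

    catalog maps item IDs to embedding vectors, assumed to come from a
    pretrained image model. The query item itself is excluded.
    """
    query = catalog[query_id]
    scored = [
        (cosine_similarity(query, vec), item_id)
        for item_id, vec in catalog.items()
        if item_id != query_id
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [item_id for _, item_id in scored[:k]]


# Hypothetical 4-dimensional embeddings (real ones have hundreds of dimensions).
catalog = {
    "impressionist_landscape": [0.9, 0.1, 0.3, 0.0],
    "impressionist_portrait": [0.8, 0.2, 0.4, 0.1],
    "cubist_still_life": [0.1, 0.9, 0.0, 0.4],
    "pop_art_print": [0.0, 0.3, 0.1, 0.9],
}
print(more_like_this("impressionist_landscape", catalog))
```

Because similarity is measured between embeddings rather than keywords, two items can match on stylistic qualities that no product tag describes.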

Visual search technology is well received by online shoppers and is redefining the online shopping experience.

As modern consumers expect search to be as convenient and straightforward as possible, visual search has become a must for digital marketers looking to maintain their competitive advantage.

ii) Upselling Opportunities with Product Recommendations

Sellers want to upsell to their customers, and customers appreciate being shown relevant products while shopping, especially when there is a discount.

The problem has always been figuring out what customers want.

Collaborative filtering algorithms can process large amounts of e-commerce activity data and learn the hidden features that underpin successful recommendations. Even for large catalogs with little data on individual products, deep learning can provide high-quality recommendations that are relevant to each user and help drive sales.
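Below is a minimal sketch of one classic form of collaborative filtering: item-based filtering with cosine similarity over binary purchase data. The users, items, and purchases are hypothetical. This is a deliberately simple baseline, not the deep learning approach the paragraph mentions.

```python
import math

# Hypothetical purchase history: user -> set of purchased item IDs.
purchases = {
    "alice": {"sofa", "lamp", "rug"},
    "bob": {"sofa", "lamp"},
    "carol": {"lamp", "rug", "vase"},
    "dave": {"sofa", "cushion"},
}


def item_similarity(item_a, item_b, history):
    """Cosine similarity between two items' binary 'who bought it' vectors."""
    buyers_a = {u for u, items in history.items() if item_a in items}
    buyers_b = {u for u, items in history.items() if item_b in items}
    if not buyers_a or not buyers_b:
        return 0.0
    overlap = len(buyers_a & buyers_b)
    return overlap / math.sqrt(len(buyers_a) * len(buyers_b))


def recommend(user, history, k=2):
    """Score items the user has not bought by their summed similarity
    to the items the user already owns; ties break alphabetically."""
    owned = history[user]
    all_items = set().union(*history.values())
    scores = {
        candidate: sum(item_similarity(candidate, item, history) for item in owned)
        for candidate in all_items - owned
    }
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, score in ranked[:k] if score > 0]


print(recommend("bob", purchases))
```

Bob is steered toward the rug mainly because shoppers who bought a lamp, as he did, also tended to buy a rug. The same co-purchase signal underpins “customers also bought” upselling.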

iii) Augmented reality (AR)-based Try Before You Buy Feature

Augmented reality combines real-world elements (such as backgrounds) with computer-generated content, for instance, a product.

Suppose you want to check out how a furniture item or an art piece looks in your living room. AR can place a computer-generated item into the actual image of the room.

You need computer vision to position the digitized item accurately within your room’s settings and rotate it to make it appear totally authentic.

Customers are drawn to these immersive experiences, which make it convenient to buy something without seeing it in person. Retailers should adopt features like AR to engage potential customers and boost their revenue.

iv) Fitting Rooms with Magic Mirrors

Magic mirrors are fast becoming the hottest commodity in the retail sector, replacing traditional mirrors. They are increasingly used for in-store shopping, combining the original functionality of a mirror with a computer and monitor and opening up many new possibilities.

Unlike traditional mirrors, these let you see both your front and back, even simultaneously. They offer both real-time and recorded views, complemented by augmented reality for virtually “trying on” different outfits.

After trying on and choosing outfits, users can buy them directly through the online transaction features built into the mirror.

v) Automating Categorization Saves Time and Improves UX

Manually categorizing products costs considerable time, effort, and resources, and it remains highly prone to errors.

Computer vision can “view” the products and automatically categorize and catalog them.

Automated categorization can be a real game-changer for businesses with continually changing product inventory types, for example, grocery stores.

vi) Improved Search Accuracy with Improved Product Attribution

Errors in product descriptions are quite likely, especially in extensive catalogs, and they degrade the performance of search and recommendation engines.

Retailers with large catalogs urgently need accurate product attribution to deliver better search results. Because text search alone cannot match the effectiveness of visual search and visual product recommendations, visual product attribution is especially valuable in such setups.

vii) Cost Reduction with Better Inventory Management

It is imperative to maintain accurate inventory management because customers get frustrated when they order items only to find out that they are sold out.

A reliable digital point of sale requires accurate inventory, which in turn requires availability information to be included in search indexes. Advanced computing techniques such as artificial intelligence and machine learning help maintain this inventory accuracy.

6. Computer Vision Applications in the Sports and Fitness Industry

For the past two decades, coaches have used data science in sports to improve the performance of their players.

They have used big data to make split-second decisions on the pitch as well as decisions off it, such as searching for “the next big thing.”

Meanwhile, football referees now use video assistant referee (VAR) technology, which helps them make more accurate judgements on major decisions such as goals, penalties, and red cards.

And now that artificial intelligence, especially deep learning, is involved, the sports experience will change even more.

Let’s see some examples to understand all this better.

i) Real-Time Action Management

Researchers from the University of Shiraz and the University of Waterloo have done extensive work on recognizing hockey events effectively. The team developed the so-called Action Recognition Hourglass Network (ARHN), a comprehensive multi-component model for processing and analyzing visual data.

Basically, one algorithm takes a piece of motion video content and converts it into a series of images. Another algorithm then analyzes the players’ poses (forward and crossover skating, pre- and post-shot positions) and classifies them.

Such models help deliver more accurate and fairer results in hockey and other sports.

ii) Efficient Ball Tracking in Tennis and Other Such Sports

Tennis has tracked precise ball trajectories for the past couple of decades. Specialized systems look for objects in each image that resemble a sphere. Once the ball has been detected across frames, a motion model links these detections into a three-dimensional trajectory.

Multiple camera angles and flexible motion registration are essential here. The primary purpose is to determine whether the ball landed in or out of bounds on a given shot.
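The in/out decision can be illustrated with a simplified physics-based motion model. This sketch assumes that a tracker has already produced 3D ball positions, in meters, at known times. It ignores air drag, spin, and the bounce itself, and it fits the flight path with gravity taken into account. The predicted landing point is then checked against singles-court dimensions (23.77 m by 8.23 m). The court coordinate convention and the synthetic trajectory are assumptions. Commercial line-calling systems are far more sophisticated.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2; drag and spin are ignored


def fit_line(ts, vals):
    """Ordinary least-squares fit vals ≈ a + b*t; returns (a, b).
    Needs at least two distinct time stamps."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_v = sum(vals) / n
    var_t = sum((t - mean_t) ** 2 for t in ts)
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(ts, vals))
    b = cov / var_t
    return mean_v - b * mean_t, b


def predict_landing(samples):
    """Estimate where a ball in flight first reaches the ground (z = 0).

    samples: list of (t, x, y, z) tuples in seconds and meters, as a
    multi-camera tracker might output before the bounce.
    """
    ts = [s[0] for s in samples]
    x0, vx = fit_line(ts, [s[1] for s in samples])
    y0, vy = fit_line(ts, [s[2] for s in samples])
    # Undo gravity so height becomes linear in t: z + G t^2 / 2 = z0 + vz t.
    z0, vz = fit_line(ts, [s[3] + 0.5 * G * s[0] ** 2 for s in samples])
    # Solve z0 + vz t - G t^2 / 2 = 0 and keep the positive root.
    t_land = (vz + math.sqrt(vz ** 2 + 2 * G * z0)) / G
    return x0 + vx * t_land, y0 + vy * t_land


def is_in(x, y, length=23.77, width=8.23):
    """Check a landing point against singles-court bounds.

    Assumed coordinates: x runs along the court from one baseline (0) to
    the other (length); y runs across it, centred on the middle line.
    """
    return 0.0 <= x <= length and abs(y) <= width / 2


# Synthetic tracker output: seven samples along a known flight path.
samples = []
for i in range(7):
    t = 0.05 * i
    samples.append((t, 2.0 + 25.0 * t, 0.5 + 1.0 * t, 1.0 + 2.0 * t - 0.5 * G * t ** 2))

x, y = predict_landing(samples)
print(f"landing at x={x:.2f} m, y={y:.2f} m, in={is_in(x, y)}")
```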

These solutions generate intelligent statistics in real-time for correct refereeing and reliable sports performance analytics.

iii) Training and Development Analytics

Modern sport places greater demands not only on athletes but also on coaching staff. Success in team sports depends less on the presence of “stars” than on how well team play is organized. Evaluating each player’s actions and interactions, as well as the coach’s decisions, is an effective tactic and an invaluable part of game strategy.

Computer vision in sports analysis is an excellent tool for obtaining objective, up-to-date information when simply recording video of the field is not enough. Mathematical processing of the video stream makes it possible to determine the position of every player on both teams at any given moment.
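Determining player positions usually involves mapping image pixels to pitch coordinates. A standard tool for this is a planar homography, a 3x3 matrix that relates points on the image plane to points on the flat pitch. The sketch below only applies such a matrix. It assumes the matrix has already been estimated from at least four known pitch landmarks, for example line intersections. Both the matrix values and the player pixel positions are hypothetical.

```python
def image_to_pitch(h, u, v):
    """Map an image pixel (u, v) to pitch coordinates using a 3x3 homography.

    h is a row-major 3x3 matrix (list of lists), assumed to have been
    estimated beforehand from known pitch landmarks.
    """
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    return x / w, y / w  # divide out the homogeneous coordinate


# Hypothetical homography: scales pixels to meters with mild perspective.
H = [
    [0.05, 0.0, 0.0],
    [0.0, 0.08, 0.0],
    [0.0, 0.0005, 1.0],
]

# Hypothetical player foot positions (pixels) from a detector, one per team.
players = {"home_7": (640, 300), "away_10": (200, 500)}
for name, (u, v) in players.items():
    px, py = image_to_pitch(H, u, v)
    print(f"{name}: x={px:.1f} m, y={py:.1f} m")
```

Applying this per frame to every detected player gives the pitch-level positions that tactical analysis builds on. The homogeneous division is what corrects for the camera’s perspective.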

Sports video analysis systems have become a very lucrative business for many sports arenas and clubs. Although building such systems requires dozens of synchronized cameras and substantial computing power, the effort usually pays off in the long run.

iv) Prevention of Life-Threatening Situations

In NASCAR and similar sports, where drivers can face life-threatening situations, computer vision is used to detect and prevent vehicle damage promptly. Such systems genuinely save lives.

Such systems typically rely on comprehensive big-data databases of vehicle models to recognize specific cars and analyze them in detail during the event. This gives professionals real-time insight into each vehicle’s internal condition so they can detect potential faults that could have serious consequences.

Conclusion

The core strength of computer vision is the high accuracy with which it can replace human vision when properly trained. Countless human-run processes in use today could be handled by computer vision and other AI-driven applications to reduce mistakes caused by human error. This would not only save immense cost and time but also significantly improve outcomes.

From small startups to industry giants like Microsoft, Facebook, and Tesla, businesses are eager to explore and implement advances like computer vision to improve all aspects of our lives. So, it’s about time we work together toward a seamless adoption of computer vision and other AI-backed technologies for the collective betterment of humanity.